The slides for the presentation that Tim Hart, Doug Snow, and I gave jointly at the 2009 MLTI Student Conference are now online. Here's the original session description:

To understand video games and their uses, you have to analyze them — but you also have to play them. So, in this session, we will be doing both. What is it that draws kids of all ages — and adults — to games? How can games, and the lessons learned from playing them, be used in a classroom setting? Why is the recent "game builder" game Little Big Planet such a huge success — and what are its lessons for educators? How are virtual worlds defining a new learningscape? This session will offer an opportunity to explore, experience, participate, and reflect on games, gaming, and education.

Tim's slides are available from his blog, while my slides can be downloaded here. I have also uploaded the list of games we showcased in this presentation — each game listing is accompanied by a brief explanation for why that game was selected. With the exception of the Wii games, all the games are available either as downloadable demos, or for free.

My thanks to the team of students from Messalonskee HS that assisted us in this session — great work, guys.

Wolfram Alpha, the new search tool developed by Stephen Wolfram and his team, is now available for anyone to try out, and it appears to have caught the eye of the press. I've been testing it quite heavily since its release, and I have to say that the engine driving the system is pretty impressive - but don't take my word for it: Doug Lenat (of Cyc fame) also says so. It's also nice to see articles in the popular press that actually talk about AI and related fields reasonably, rather than complaining because HAL isn't a reality yet. That said, there's an aspect of Wolfram Alpha in its current state that I find rather worrisome: the company's overall approach to data.

When you read through the FAQ page on the Wolfram Alpha site, you come across a section on data that contains the following phrases:

Where does Wolfram|Alpha's data come from?

Many different sources, combined and curated by the Wolfram|Alpha team. At the bottom of each relevant results page there's a "Source information" button, which provides background sources and references.

Can I find the origin of a particular piece of data?

Most of the data in Wolfram|Alpha is derived by computations, often based on multiple sources. A list of background sources and references is available via the "Source information" button at the bottom of relevant Wolfram|Alpha results pages.

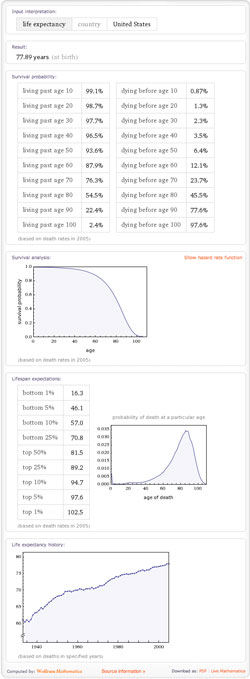

OK, sounds good - let's check it out. When I run a query on "USA Life Expectancy", I get a very nice page with results, tables, and graphs:

However, nowhere on that page am I told the actual source of the data. No problem - I'll just click on the "Source Information" link:

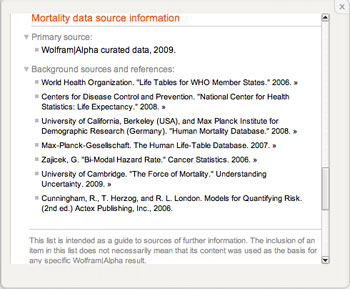

Wait a second - I see as the primary source "Wolfram|Alpha curated data, 2009", followed by four databases and three sources for models - so where did this data come from? Is it from one of the databases? A composite of some or all four? And if the latter, how was the composite constructed? OK, there's one more link at the bottom of the page - it reads "Requests by researchers for detailed information on the sources for individual Wolfram|Alpha results can be directed here." Clicking on that link, I get the following screen:

I know, it's beta time - but frankly, this is extremely discouraging. Even if this page worked, it seems to imply that detailed info about how the query results were sourced will require that a human being get back to me. By contrast, the CDC tells me exactly how its data was collected and analyzed, as do all the other data sources used by Wolfram Alpha. In other words, Wolfram Alpha has taken data that was transparent in its sourcing and analysis and made it - at least at first impression - opaque.

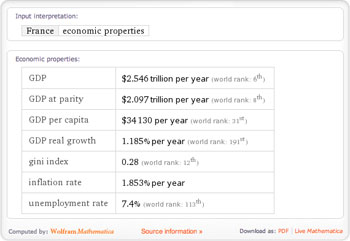

Things get worse when we get into economic data. Try the following query about basic economic data: "France Economy"

"Source Information" is even more opaque in this case, with a lengthy list of sources that includes everything from the UN and OECD to Britannica and Wikipedia, but does not include France's own governmental offices. Does this mean that the - quite reliable in my experience - French government's data is not being considered in this aggregate? Given that Wolfram Alpha claims to have "trillions of elements" of data in its system, you would think that data published by world governments would be a rather obvious source to include - even at launch. Also, what year do these numbers come from? If you assumed 2008, say, you'd be wrong - but there's no way of knowing that from this screen. To find out, you'd have to run a separate query - for instance, for the Gini Index the estimate corresponds to 2005:

This is not an obscure, nitpicky complaint: any serious user of social and economic data knows that how data was sourced, aggregated, and processed crucially determines how it can be used. Looking at the list of sources mentioned by Wolfram Alpha, I would use some for some purposes, and others for others - but I can't leave that decision in Wolfram's hands, nor would I accept a teacher or professor encouraging their students to do so. And for people inclined to say "hey, just roll with it - after all, it looks pretty good, and they seem to know what they're doing", I would remind them that the current economic situation was very largely created by people doing precisely that - putting too much trust in opaque data, and the people and algorithms that generated it.

Let me be clear: I do not in any way believe that the creators of Wolfram Alpha have any nefarious intent in how they're handling data and presenting search results. Indeed, I have nothing but the highest respect for Stephen Wolfram's intelligence and that of his team, and believe that they are indeed trying to provide people with a reliable and useful tool - but the road to decision hell is nonetheless paved with the best data intentions.

Now, all my complaints could be addressed trivially: if the "Source Information" link, instead of directing users to a generic page, revealed the exact data sources used, together with a general description of any additional processing applied, I would be perfectly happy. All the necessary info is in the database, and should be readily retrievable for any given query. As it is, I am not sanguine that this change will occur. Why? Well, reading the "Terms of Use" is a positively depressing experience in over-extension of copyright claims - for instance, Wolfram Alpha claims copyright over any plots, formulae, tables, etc. that might be generated as a result of your query. To put this in context, this would be not unlike Microsoft claiming copyright over any plots you generate using Excel. You are also forbidden from executing "systematic patterns of queries", and "systematic professional or commercial use of the website" is out of bounds. In other words, Wolfram Alpha is unusable for anything much more challenging than the occasional bar bet - I would have to warn any librarian that "systematically" using Wolfram Alpha to teach students about data sourcing might run afoul of the TOU, and regular use by a university research group would seem to be likewise forbidden. Of course, there is - you guessed it - a commercial license available as an option, although it's unclear whether this option would lift the veil of opacity I detailed above.
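To make the fix proposed at the start of the previous paragraph a little more concrete, here is a minimal sketch of what a result that carries its own provenance might look like. Everything in it is an assumption made for illustration: the `Source` and `Result` classes, the `source_information` function, and the placeholder values are hypothetical, and none of it reflects how Wolfram Alpha actually stores or serves its data.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Source:
    """One upstream dataset that contributed to a result (hypothetical)."""
    name: str
    year: int


@dataclass(frozen=True)
class Result:
    """A query answer bundled with the provenance needed to judge it."""
    label: str
    value: float
    sources: Tuple[Source, ...]
    processing: str  # plain-language note on how the sources were combined


def source_information(result: Result) -> str:
    """Render what a per-result "Source Information" view could show."""
    lines = [f"{result.label}: {result.value}"]
    for src in result.sources:
        lines.append(f"  source: {src.name} ({src.year})")
    lines.append(f"  processing: {result.processing}")
    return "\n".join(lines)


# Placeholder values for illustration only, not real statistics.
example = Result(
    label="Example indicator",
    value=1.0,
    sources=(Source("Agency A", 2005), Source("Agency B", 2006)),
    processing="unweighted mean of the two source figures",
)
print(source_information(example))
```

The point of the sketch is simply that the sources, their years, and the processing note travel with the value itself, so a reader never has to guess which dataset or which year a number came from.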

All of this being said, I have hopes that the situation with Wolfram Alpha will eventually change. Why? Well, Google has already made some announcements this week that seem to indicate that it is very interested in tapping the same market that Wolfram Alpha is targeting. And while Wolfram Alpha may currently have a head start in the necessary infrastructure, Google has some pretty mean mathematical and programming chops of its own - and the cash reserves to buy as many more warm bodies as it needs. So, given that Google's business model does not rely on opacity of information sources for searches, I can see Wolfram Alpha deciding that it might prove wise to relax some of its constraints sooner rather than later. In the meantime, the only use of Wolfram Alpha I can recommend to educators and students is to get a crude first answer from it - and then go to its listed sources to get the real data.

The recording of the workshop I presented at last week's Pictures Sounds Numbers Words conference is now available online. Here's the original workshop description:

What do you get when you combine presentation software with a concept mapping tool, and add a bit of comic book flair to the mix? The answer: Prezi, a truly remarkable Web 2.0 tool that transforms what is meant - and what can be said - by online presentations. We'll explore Prezi hands-on, and take a look at some of the possibilities it opens up for educators. We will also see how it fits in with other concepts, such as the "infinite canvas" in webcomics, and what this means for future work in media literacy and creation.

I also strongly recommend checking out the recordings of the other workshops - excellent presenters, and a great range of topics.