Educational gaming continues to make headway, with recent news items focusing on the creation of a public school centered around it, the discussion of its role in civics education, and how it can be used as a way of improving STEM education. My own podcast series, Game and Learn, was designed to provide educators at all levels with a self-contained introduction to the core knowledge needed to use educational gaming effectively. That said, one aspect of educational gaming still frequently goes unaddressed: the fact that many educators lack personal experience with actual gameplay.

It is not uncommon for educational gaming projects to be undertaken by instructors who have either never played a videogame, or are at most familiar with one or two examples in narrowly defined subgenres. This can lead to problems of varying severity, from unrealistic expectations about what can be accomplished to a lack of appreciation for features and nuances of games that can be effectively exploited in learning scenarios.

Until recently, remedying this deficit was nontrivial: the task of assembling a reasonably complete game collection could be quite daunting, given the cost of the games themselves, the need for dedicated consoles to play game genres not well exemplified on personal computers, and the overall lack of a list of “games that matter” to help guide the process. However, over the past year, the economics of game publishing on Apple’s iTunes Store have changed this situation dramatically. The games are inexpensive, cover all key genres, and are playable on all iOS devices, from the basic iPod Touch to the most advanced iPhone or iPad.

That leaves the need for a game list as the sole unaddressed point – which is where this blog post comes in. I have spent some time over the past year testing games (I know, tough job, poor me) so as to compile such a list. My criteria were:

- All key core genres had to be covered, not just the ones traditionally used in educational gaming. Much of the power of games in learning can only be understood once the peculiarities of each genre have been experienced. I’ve never seen a good educational game in the sports genre, for instance – but the way in which sports games present players with complex sequence-based tactical choices on the fly that are nonetheless learnable and manageable can definitely inform educational game use and design.

- The games should all be playable by beginners – but lead to expertise applicable to more difficult games. For instance, R-Type is quite likely the best Shoot ‘Em Up ever designed – but it is also punishingly difficult. Platypus is much more accessible, but still illustrates most of the key mechanics of the genre.

- The games should all demonstrate key aspects of their genre in the early stages of the game. While playing any given game through to its conclusion might be enjoyable, doing so for every game on the list is not likely to be a worthwhile pursuit for an instructor. Some role-playing games take hours to really “get started”; by contrast, Vay demonstrates all core mechanics in the first half-hour of play.

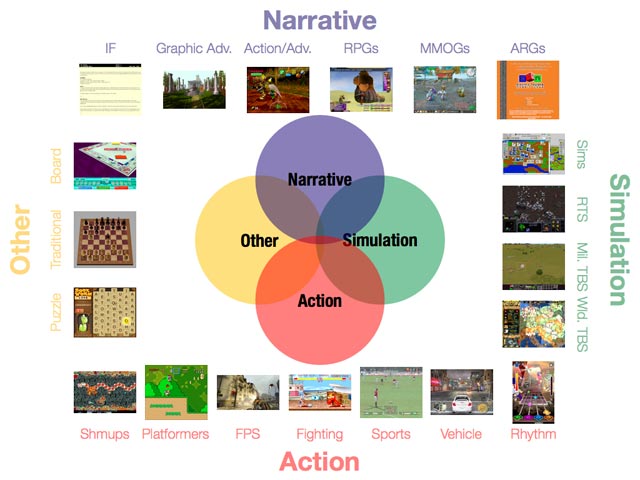

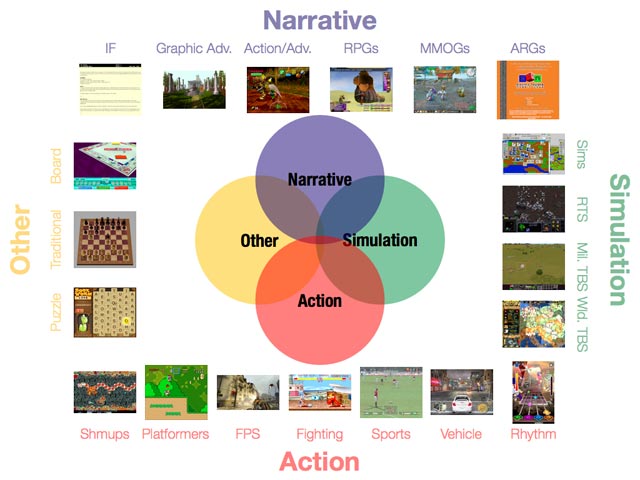

I have used the list of genres discussed in episode #3 of my podcast series:

For each genre, I have selected two games, one to act as an intro, and a second one for further exploration, or as an alternative to the first choice. Wherever available, I have linked to free “try before you buy” versions – in many cases, they suffice to provide a good intro to the genre, and some can be upgraded to full game versions if so desired. I have played all the games, and most of them should play fine on all iOS devices, with the possible exception of the oldest iPod Touch. If your device “hiccups” when playing a game, you might want to reset it, following the instructions on Apple’s site.

If you’re interested in looking for more games, community sites can be a great source of info – I have found discussions and reviews on Touch Arcade to be particularly informative. Finally, you may find some of my podcasts useful as a way to learn what to look for in these games – in particular, podcasts 3 and 4 are especially relevant here:

- Podcast #3, “A Menagerie of Genres”, discusses in greater detail the classification of games used here.

- Podcast #4, “Games and Learning”, discusses and summarizes James Gee’s research into the components of learning present in videogames. It’s worthwhile keeping the list of elements identified by Gee at hand when you’re playing through these games, and seeing how many of the items he identifies you can find in the game you’re playing.

Enough talking – it’s time to have some fun.

Narrative Games:

Text Adventure: Frotz

Frotz provides an interface to play all the great non-commercial classics of Interactive Fiction (IF). Text structures the gaming experience here: players are presented with descriptions of environments and events, and respond by typing in text themselves. An excellent IF game to begin with is Emily Short’s Bronze – it was designed as an introduction to the genre for both players and game designers. It is worthwhile studying the progression of puzzles, and their integration throughout the game – there are multiple lessons to be learned here.

Also see: it is almost impossible to pick a single follow-up game here, but particularly good choices are Graham Nelson’s Jigsaw, for its complex, deep puzzles; Dan Schmidt’s For A Change, for its unusual use of language; and Adam Cadre’s Photopia, for its approach to storytelling. Also try the “Browse IFDB” button – it’ll take you to more gems of the genre, such as Dan Shiovitz’s logic puzzler Bad Machine.

Graphic Adventure: Myst (Full version)

While the focus here is still solidly on storytelling and associated puzzles, images, rather than text, guide the experience. Very few games have succeeded as thoroughly as Myst in creating an immersive experience, where the mood of the landscape perfectly matches – and defines – the puzzles. Try comparing Myst’s puzzles to those from one of the IF exemplars – what similarities/differences do you see?

Also see: The Secret of Monkey Island: Special Edition (Full version)

A very different game from Myst – dialogue, which is near-absent from Myst, plays a central role here. Also worthwhile studying as one of the few examples of non-IF games that manage to make humor (mostly) work for them.

Action-Adventure: Across Age (Full version)

The action-adventure genre is, by definition, a hybrid one. Some games lean more towards the action side of things (i.e., are almost platformers), while others are more focused on puzzles and character attribute development (i.e., akin to a Role-Playing Game). Across Age is an exemplar of the latter – relatively minimal finger agility is required. Examine how players are encouraged to explore and develop skills – and how those skills are used later in the game.

Also see: James Cameron’s Avatar (Full version)

This game leans towards the action side of the genre. The game allows for relatively little exploration, i.e. it is mostly linear in its presentation of challenges, but nonetheless provides a decent intro to the shift in puzzle construction demanded by the action perspective.

Role-Playing Game: Vay

RPGs are the descendants of pen-and-paper games like Dungeons and Dragons, and as such tend to focus on the interaction between narrative setting and roles acted out by players who must complete a set of assigned tasks to develop their characters. As an introduction to the genre, Vay is a perfect choice: a decent (if somewhat overwrought) story, a gentle learning curve for its character attribute development challenges, and enemy fights that are not too repetitive if you play along at the “just right” pace. Not the prettiest game around – but a good beginner’s RPG nonetheless.

Also see: PuzzleQuest

An excellent example of the possibilities that open up when you combine genres – in this case, mixing an RPG with a Bejeweled-style match-n puzzle. Worth using as a point of departure for thinking about possibilities for non-traditional challenges in educational RPGs.

Massively Multiplayer Online Game: Pocket Legends

In MMOGs, the challenges and worlds of the RPG are shared with multiple other players, creating new possibilities for player collaboration, competition, and other forms of interaction. Pocket Legends plays upon fantasy themes, and as such is a mini-version of the justly famous World of Warcraft. In addition to studying these interactions, you may also want to think about the storytelling themes embedded within the game, and how they could be used as a point of departure for student-driven narratives.

Also see: Outer Empires

Outer Empires is a more cerebral game than Pocket Legends, focusing on themes of interstellar travel, trading, and conflict. As such, it feels like a scaled-down version of the much-larger computer MMOG Eve Online. As food for thought, consider how mathematical models, and student-driven exploration could be embedded within similar worlds. Note that as of the time of this writing, this game requires a device that can be updated to iOS 4.2 or later, so it cannot be played on first-generation devices.

Simulation Games:

Sims: Virtual City (Full version)

Sims (short for simulation games) create machines designed to simulate systems in the real world to varying degrees of accuracy, and embed these machines in gaming contexts. While the well-known SimCity is available on iOS, Virtual City is overall better scaffolded as an introduction to the intricacies of running a city simulator. Take a look at how missions build up knowledge about the tasks ahead, and make sure to explore the sandbox mode for free-form creation.

Also see: The Sims 3 World Adventures

The Sims series has specialized in creating worlds for players to explore the outcomes of interpersonal interactions, as played out by their doll-like characters. While the focus of the series has not been on realism, it nonetheless creates an interesting sandbox for players to explore “what if” fantasies and narratives about the world.

Real-Time Strategy Games: Warfare Incorporated

RTS games put players in contexts where they have to make quick decisions in real time about resources and their use that will affect the outcome of a conflict. The interaction between intrinsic game piece parameters and terrain-based properties is a key component of this process, and is equally important in all other types of strategy games. Warfare Incorporated follows the standard rules for the genre, putting the player in charge of making progressively more complex tactical decisions affecting a series of military battles. The scaffolding of this progression is worth studying, as is the subjective evolution of your own analytical processes when under time pressure.

Also see: The Settlers (Full version)

A slower, but more complex RTS game, incorporating deeper strategy elements, akin to the turn-based Civilization series. Worthwhile studying for how these more complex elements modify the game experience.

Military Turn-Based Strategy Games: Highborn (Full version)

Military TBS games focus on tactical decisions in the context of a battlefield, but unlike RTS games, they allow the player as much time as they want to decide what to do next. Highborn is an excellent introduction to the genre, mixing good tutorial levels with humor throughout the game. It is particularly worthwhile contrasting the subjective game experience with that provided by RTS games.

Also see: UniWar

UniWar provides, in addition to an individual TBS experience, the possibility of playing against other players. Particularly worthwhile for comparing the experience of playing against a game engine to that of playing against other human players, and the pros/cons of the respective experiences.

World Turn-Based Strategy Games: Civilization Revolution (Full version)

World TBS games focus on the “big picture”, i.e., the strategy involved in building up a large-scale civilization (including resources and decisions beyond the military domain), rather than the tactical decisions of multiple battles. The Civilization series is the clear leader in this genre, and Civilization Revolution is a particularly accessible game in the series. While it makes no claims to historical accuracy, it can be an excellent tool to get students to think about the complex web of factors that are involved in historical processes. The in-game tutorials are good, but some players would like additional resources for a game this rich – for them, I recommend the supplementary materials available online.

Also see: nothing here yet – one can only hope that games like Europa Universalis, that try to include a measure of historical accuracy within the genre, will make it to the iOS platform sometime soon.

Action Games:

Shoot ‘Em Ups: Platypus – Squishy Shoot-em-up (Full version)

In shmups, players are presented with waves of enemies that they must pilot a vehicle through, eliminating them while avoiding attacks. These games favor even faster decision-making than RTS games, and tactical thought becomes a required near-intuitive reflex – good shmups do not reward thoughtless button mashing. Platypus is a whimsical shmup that is accessible to beginners, yet challenging enough for them to explore the full range of what the genre can provide. See how your own reflexive thinking evolves throughout the game, and consider how this might help students develop a feel for otherwise hard-to-visualize physical scenarios such as those involved in the electromagnetic educational game SuperCharged!

Also see: Space Invaders Infinity Gene (Full version)

A shmup that doubles as an intelligent reflection on the history of videogames in general, and shmups in particular. Particularly notable for the way in which gameplay evolves to reflect this history alongside player skills.

Platformers: Giana Sisters (Full version)

Courtesy of the success of games like Super Mario Brothers, everybody knows the basic formula for the platformer: a character runs through a terrain littered with obstacles, picking up goodies and defeating enemies along the way. Giana Sisters is a superb tribute to classic platformers, with a gentle learning curve for newcomers. The game’s teaching style is particularly worth exploring here – pay special attention to how a new skill is introduced, then rehearsed, then used in progressively more complex scenarios in combination with other skills. It is also worthwhile studying how the game provides feedback to the player as to their relative level of skill in completing tasks – and thinking about how similar tactics might be used for formative assessment in the classroom.

Also see: :Shift: (Full version)

What do you get when you combine a platformer with a puzzle game that requires a fundamental rethinking of what is meant by terrain? One answer: :Shift:, a puzzle platformer that draws upon the player’s 2D figure/ground reversal skills for success. Until Portal is brought to iOS devices, this may well stand as the best example available of how a platformer can help reshape thinking about spatial properties.

First-Person Shooters: N.O.V.A. – Near Orbit Vanguard Alliance (Full version)

FPS are designed to provide an immersive experience for the player, by matching their viewpoint to the viewpoint of what their game character would see around them. As such, they typically generate a “you are there” thrill ride, similar to a good horror film. N.O.V.A. is fairly typical of the genre – weak story, but good feeling for the creation of a believable surrounding environment. Pay special attention to how a world is constructed around the player that encourages the suspension of disbelief, and full engagement with the environment.

Also see: Archetype

The FPS experience in N.O.V.A. focuses on the individual player; by contrast, Archetype focuses on the multiple possibilities for player-team interactions. The ways in which teams come together and create strategies for success are worth exploring here.

Fighting Games: Blades of Fury (Full version)

Fighting games step back from the immersive perspective of the FPS, and focus the camera at a mid-range third-person perspective. What is lost in immersion is gained in the possibilities for fast, complex interaction in fights that are far more complex than simple boxing or karate matches. Blades of Fury is a good, if somewhat generic, exemplar of the 3D variety of this type of game. Pay no attention to the very silly and overwrought story; instead, focus on how the game develops player skills for choosing from a complex array of possible attack and defense moves on the fly, with split-second timing.

Also see: Street Fighter II (included in Capcom Arcade)

A classic of the genre – and one that exemplifies how reducing complexity in one part of the game domain allows for greater complexity in other aspects of the game. In this case, going from the 3D terrain in Blades of Fury to a simple side-scrolling 2D landscape allows for a much greater player challenge in choosing and timing the use of particular battle skills.

Sports Games: X2 Soccer 10/11 (Full version)

The simple sports games (e.g., bowling, tennis) included with systems such as the Nintendo Wii are easy to get into, and a lot of fun to play. However, they do little to translate the more subtle aspects of the sports experience, and in particular those components that relate to team play. In X2 Soccer 10/11, you may never feel like you’re really kicking a soccer ball – but the team tactics and strategic components of the sport are faithfully reproduced. Of special interest: how the game design allows users to control multiple players onscreen, without losing track of what is going on in the game overall, while retaining the swift game dynamics characteristic of soccer.

Also see: Baseball Superstars 2010 (Full version)

What would happen if you included an RPG within a sports game? Baseball Superstars 2010 is one possible result. The interaction between the two game genres is particularly worthy of study here – even though this specific combo is unlikely to ever occur in a good educational game, the design choices made to produce a successful game can help illuminate the design of other possible combinations.

Vehicle Games: Real Racing (Full version)

Of all game genres, vehicle games depend most crucially upon immersion and acceptable verisimilitude to the physical world to obtain player acceptance. Some vehicle games (usually called “arcade-style”) strive for just enough realism to make the experience enjoyable; others try to include as much of the vehicle physics as possible. Real Racing is an example of the latter approach – pay attention to how the game manages to draw you into its world, even though there’s a world of difference between holding an iPhone in your hands and sitting in the cockpit of a race car. Also, study how the game quickly guides you up a non-trivial difficulty ladder, while providing reasonable rewards at each stage.

Also see: Jet Car Stunts (Full version)

Another hybrid – in this case, one generated by the fusion of an arcade-style racer with a platformer. As before, the key topic here is to study the details that make the fusion work; pay special attention to how two genres with very different typical difficulty and learning curves can be reconciled in a single game.

Rhythm Games: DanceDanceRevolution S+ (US)

Rhythm games focus on the player’s capacity to reproduce different aspects of music presented to them, typically by stepping on a dance pad, interacting with a musical instrument-like controller, or – as in this case – tapping on the screen. Dance Dance Revolution is a classic of the genre, and this game does a good job of scaling down the experience from the dance floor to a small device. Of all game genres, this one relies most heavily on repeated practice and rehearsal of game sequences – so it’s well worth studying how the game keeps this from becoming boring.

Also see: Thumpies (Full version)

A perfect study in how small changes can add up to a very different game experience. Thumpies, like DanceDanceRevolution S+, invites players to keep up with the rhythm of pre-scripted music by tapping onscreen – but key changes in presentation, use of the screen tapping space, and musical development make for a radically different flow and feel to game play.

Other Games:

Puzzle Games: Zen Bound 2 Universal

There are hundreds of puzzle games available for iOS devices, but the very best ones are those that could not have existed in the world of pencil and paper. Zen Bound 2 proposes a deceptively simple challenge: how efficiently can you wrap an irregularly shaped object in a length of string? The game taxes both tactical planning and visual 3D thinking, while making creative use of the device – literally asking the player to roll it in space to solve the puzzle. A wonderful game that should help trigger creative and original thought about what educational games might look like.

Also see: Lumines – Touch Fusion (Full version)

One more example of the challenges and solutions that arise when two game genres meet. In this case, a Tetris-type puzzle is mixed with a slow rhythm game: particularly worth studying are the features of the hybrid that appear in neither of its two parent genres.

Traditional Games: Shredder Chess (Full version)

Chess, go, backgammon, and poker are all worthy of study as particularly evolved examples of games that have become streamlined over time to their core essentials. In the computer domain, good examples of software that plays these games should not just play the games well, but also demonstrate good tutorial capabilities, good tools for showing players the deep structure of game positions, and interesting ways of adapting to player level and skill. Shredder Chess does an excellent job of accomplishing all these goals, and is especially valuable to educators as an exemplar of how to provide adaptive feedback and formative assessment.

Also see: SmartGo Pro (Full version)

While no go program has yet reached a level of play expertise comparable to the best chess programs, SmartGo Pro does a good job of compensating for this by providing players with a complete set of tools for learning, including problems and past games for analysis. A good example of a comprehensive approach in games that seek to educate the player.

Board Games: Reiner Knizia’s Samurai

In recent years, so-called “Eurogames” have transformed the board game landscape, with approaches that are both original and easily learned, while providing for excellent depth and complexity in game play. Settlers of Catan and Carcassonne are two well-known examples, but the lesser-known Samurai may well outshine them all in terms of providing a game that can not only be readily learned and enjoyed, but also easily broken down into its component parts by game scholars interested in seeing what makes the game tick. This particular computer incarnation of the game excels in its tutorial capabilities, and supplies an engine that is both a reasonably challenging opponent and one with flaws that can lead to a deeper understanding of the underlying mechanics of the game.

Also see: Monopoly

It is worthwhile examining how a very well-known board game classic is translated to the digital domain as a way of better understanding what makes games work in general. Monopoly is, of course, a classic that nearly everyone will have played at some time, and as such is ideally suited to this exercise.

This blog post is dedicated to the memory of my dog, Freya, who taught me more about play than I can ever acknowledge.